In an earlier machine translation post, we covered part 1 of this exercise, which dealt with loading the data. In this section, we continue the same exercise with its second phase: preprocessing and training.

Machine translation exercise

Preprocessing

```python
# Machine translation exercise
from tensorflow.keras.preprocessing.text import Tokenizer


def tokenize(x):
    """
    Tokenize x
    :param x: List of sentences/strings to be tokenized
    :return: Tuple of (tokenized x data, tokenizer used to tokenize x)
    """
    t = Tokenizer()
    # Fit the tokenizer on the documents (builds word_index, ranked by word frequency)
    t.fit_on_texts(x)
    return t.texts_to_sequences(x), t
```

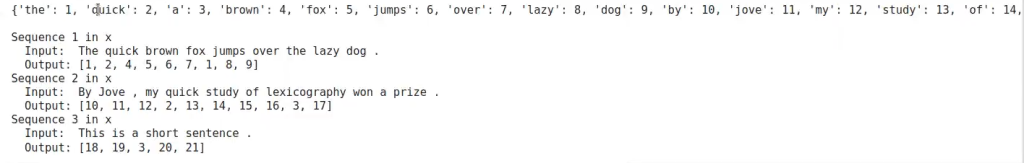
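To make `Tokenizer` less of a black box, here is a simplified pure-Python approximation of what `fit_on_texts` and `texts_to_sequences` do with their default settings: lowercase the text, replace punctuation with spaces, split on whitespace, and number the words by descending frequency starting at 1 (0 stays free for padding). The helper `simple_tokenize` is hypothetical and ignores options such as `num_words` or `oov_token`; it is a sketch of the idea, not the Keras implementation.

```python
from collections import Counter

# Characters replaced by spaces (mirrors the default filter list of the Keras Tokenizer)
FILTERS = '!"#$%&()*+,-./:;<=>?@[\\]^_`{|}~\t\n'


def simple_tokenize(sentences):
    """Hypothetical helper: approximate Tokenizer.fit_on_texts + texts_to_sequences."""
    table = str.maketrans({c: ' ' for c in FILTERS})
    split = [s.lower().translate(table).split() for s in sentences]

    counts = Counter()
    for words in split:
        counts.update(words)  # Counter keeps first-occurrence order for its keys

    # sorted() is stable even with reverse=True, so equally frequent words keep that order
    ranked = sorted(counts, key=lambda w: counts[w], reverse=True)
    word_index = {w: i for i, w in enumerate(ranked, start=1)}  # 0 is reserved for padding

    sequences = [[word_index[w] for w in words] for words in split]
    return sequences, word_index


seqs, idx = simple_tokenize([
    'The quick brown fox jumps over the lazy dog .',
    'By Jove, my quick study of lexicography won a prize .',
    'This is a short sentence .'])
print(idx)
print(seqs)
```

On the example sentences used below, this sketch ranks `the`, `quick` and `a` first because each appears twice.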

```python
# Tokenize example output
text_sentences = [
    'The quick brown fox jumps over the lazy dog .',
    'By Jove, my quick study of lexicography won a prize .',
    'This is a short sentence .']

text_tokenized, text_tokenizer = tokenize(text_sentences)
print(text_tokenizer.word_index)
print()

for sample_i, (sent, token_sent) in enumerate(zip(text_sentences, text_tokenized)):
    print('Sequence {} in x'.format(sample_i + 1))
    print('  Input:  {}'.format(sent))
    print('  Output: {}'.format(token_sent))
```

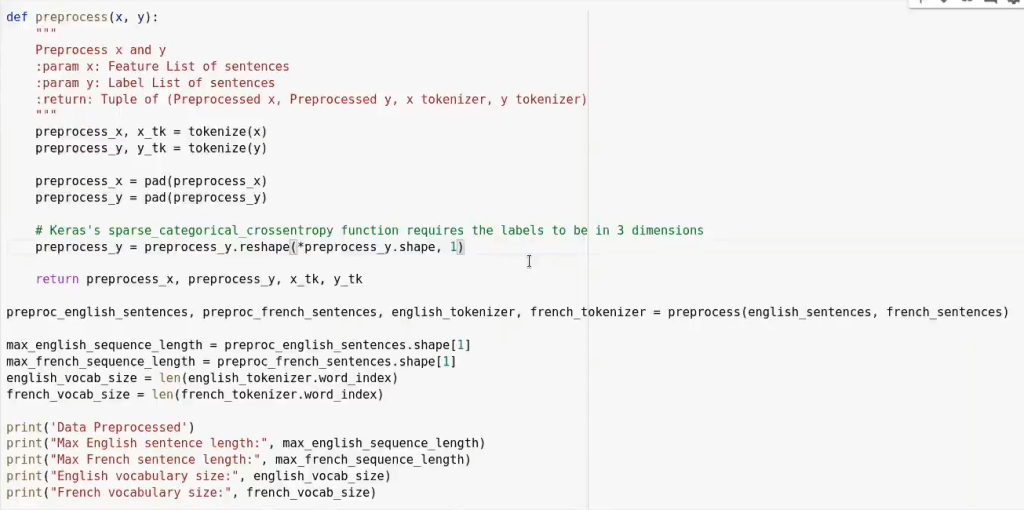

```python
# Machine translation exercise
from tensorflow.keras.preprocessing.sequence import pad_sequences


def pad(x, length=None):
    """
    Pad x
    :param x: List of sequences.
    :param length: Length to pad the sequence to. If None, use length of longest sequence in x.
    :return: Padded numpy array of sequences
    """
    if length is None:
        length = max([len(sentence) for sentence in x])
    # padding='post' appends the zeros at the end of each sequence
    return pad_sequences(x, maxlen=length, padding='post')
```

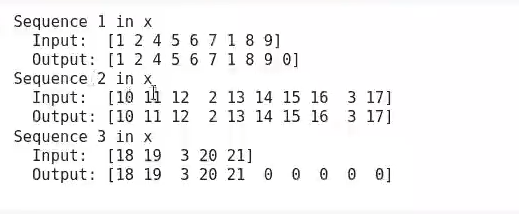
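`pad_sequences` with `padding='post'` appends zeros to the end of each sequence until it reaches `maxlen`. A minimal NumPy sketch of that behavior follows; `pad_post` is a hypothetical name, and for sequences longer than `maxlen` it mimics the Keras default `truncating='pre'`, which drops tokens from the start.

```python
import numpy as np


def pad_post(sequences, maxlen=None, value=0):
    """Hypothetical helper: right-pad integer sequences with `value` (like padding='post')."""
    if maxlen is None:
        maxlen = max(len(seq) for seq in sequences)
    out = np.full((len(sequences), maxlen), value, dtype=np.int32)
    for i, seq in enumerate(sequences):
        # Longer sequences keep their last `maxlen` ids (Keras default truncating='pre')
        kept = list(seq)[max(len(seq) - maxlen, 0):]
        out[i, :len(kept)] = kept
    return out


padded = pad_post([[1, 2, 4, 5, 6, 7, 1, 8, 9], [18, 19, 3, 20, 21]])
print(padded)
```

Because 0 never appears in `word_index`, the padding value cannot be confused with a real word.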

```python
import numpy as np

# Pad tokenized output
test_pad = pad(text_tokenized)

for sample_i, (token_sent, pad_sent) in enumerate(zip(text_tokenized, test_pad)):
    print('Sequence {} in x'.format(sample_i + 1))
    print('  Input:  {}'.format(np.array(token_sent)))
    print('  Output: {}'.format(pad_sent))
```

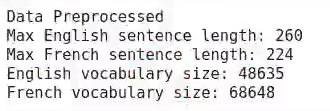

Training

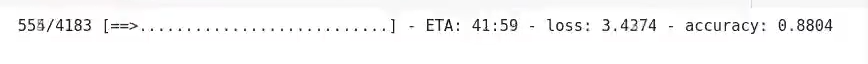

First, we will use a many-to-many RNN:

```python
# Machine translation exercise
import numpy as np


def logits_to_text(logits, tokenizer):
    """
    Turn logits from a neural network into text using the tokenizer
    :param logits: Logits from a neural network
    :param tokenizer: Keras Tokenizer fit on the labels
    :return: String that represents the text of the logits
    """
    index_to_words = {idx: word for word, idx in tokenizer.word_index.items()}
    index_to_words[0] = '<PAD>'
    # Pick the highest-scoring word id at every time step
    return ' '.join([index_to_words[prediction] for prediction in np.argmax(logits, 1)])


print('`logits_to_text` function loaded.')
```
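Despite its name, `logits_to_text` receives the output of `model.predict`, which in the models below has already gone through the softmax activation, so it holds probabilities rather than raw logits. This makes no difference to decoding: softmax is strictly increasing within each row, so `np.argmax` picks the same word id either way. The toy vocabulary and scores below are made up for illustration.

```python
import numpy as np


def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)


# Toy scores: 3 time steps x 4 vocabulary entries (id 0 = <PAD>); hypothetical vocabulary
logits = np.array([[0.2, 2.0, 0.1, -1.0],
                   [0.0, 0.3, 1.5, 0.2],
                   [3.0, 0.1, 0.0, 0.4]])
index_to_words = {0: '<PAD>', 1: 'new', 2: 'jersey', 3: 'est'}

probs = softmax(logits)
same_choice = (np.argmax(logits, 1) == np.argmax(probs, 1)).all()
decoded = ' '.join(index_to_words[i] for i in np.argmax(probs, 1))
print(same_choice, decoded)
```

Greedy decoding like this takes the single best word at each step independently; trailing `<PAD>` tokens simply mean the model predicts padding there.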

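```python
# Machine translation exercise
import tensorflow as tf

# Let TensorFlow allocate GPU memory on demand instead of reserving all of it up front.
# This must run before any GPU has been initialized.
gpu_devices = tf.config.experimental.list_physical_devices('GPU')
for device in gpu_devices:
    tf.config.experimental.set_memory_growth(device, True)
```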

```python
# Machine translation exercise
# preproc_english_sentences, preproc_french_sentences, max_french_sequence_length,
# french_vocab_size and french_tokenizer are assumed to come from part 1 (data loading).

# Reshape the input to work with a basic RNN: (samples, timesteps, 1 feature)
tmp_x = pad(preproc_english_sentences, max_french_sequence_length)
tmp_x = tmp_x.reshape((-1, preproc_french_sentences.shape[-2], 1))

learning_rate = 1e-3

input_seq = tf.keras.layers.Input(tmp_x.shape[1:])
# tf.keras.layers.LSTM uses the cuDNN kernel automatically on a GPU with its default
# arguments; the original code used tf.compat.v1.keras.layers.CuDNNLSTM here.
rnn = tf.keras.layers.LSTM(100, return_sequences=True)(input_seq)
# One score per French word (french_vocab_size) at every time step
logits = tf.keras.layers.TimeDistributed(tf.keras.layers.Dense(french_vocab_size))(rnn)
model = tf.keras.models.Model(input_seq, tf.keras.layers.Activation('softmax')(logits))
model.compile(loss=tf.keras.losses.sparse_categorical_crossentropy,
              optimizer=tf.keras.optimizers.Adam(learning_rate),
              metrics=['accuracy'])
model.fit(tmp_x, preproc_french_sentences, batch_size=64, epochs=1, validation_split=0.2)

# Print prediction(s)
print(logits_to_text(model.predict(tmp_x[:1])[0], french_tokenizer))
```
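The first model produces one prediction per input time step: the recurrent layer returns its hidden state at every step (`return_sequences=True`), and `TimeDistributed(Dense(...))` applies the same dense layer to each of those states. The NumPy sketch below reproduces that data flow with a plain tanh RNN standing in for the LSTM (a deliberate simplification), and computes sparse categorical cross-entropy as the mean negative log-probability of the correct French word id at each step. All sizes and weights are toy values, not the article's.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def many_to_many_forward(x, Wx, Wh, b, Wo, bo):
    """Toy tanh RNN with an output at every step. x: (batch, T, features) -> (batch, T, vocab)."""
    batch, T, _ = x.shape
    h = np.zeros((batch, Wh.shape[0]))
    states = []
    for t in range(T):
        h = np.tanh(x[:, t] @ Wx + h @ Wh + b)  # the same weights are reused at every step
        states.append(h)
    hs = np.stack(states, axis=1)               # (batch, T, hidden), like return_sequences=True
    return softmax(hs @ Wo + bo)                # one shared Dense per step, like TimeDistributed


def sparse_ce(y_true, probs, eps=1e-7):
    """Mean -log p(true id) over every (sample, step); y_true has shape (batch, T, 1)."""
    ids = y_true.reshape(probs.shape[:-1]).astype(int)
    picked = np.take_along_axis(probs, ids[..., None], axis=-1)[..., 0]
    return float(-np.log(np.clip(picked, eps, 1.0)).mean())


rng = np.random.default_rng(0)
batch, T, hidden, vocab = 2, 5, 8, 10
x = rng.integers(1, 20, size=(batch, T)).reshape(batch, T, 1).astype(float)  # ids as 1 feature
y = rng.integers(0, vocab, size=(batch, T, 1))
params = (rng.normal(size=(1, hidden)) * 0.1, rng.normal(size=(hidden, hidden)) * 0.1,
          np.zeros(hidden), rng.normal(size=(hidden, vocab)) * 0.1, np.zeros(vocab))
probs = many_to_many_forward(x, *params)
print(probs.shape, sparse_ce(y, probs))
```

The labels keep their trailing axis of size 1, which is why the sparse variant of the loss is used: it compares integer ids directly, with no one-hot encoding of the French vocabulary.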

Next, we add an embedding layer in front of the GRU layer:

```python
# Machine translation exercise
# Embedding expects 2-D integer input (samples, timesteps), so pad again without the
# trailing feature axis that was added for the basic RNN.
tmp_x = pad(preproc_english_sentences, max_french_sequence_length)

learning_rate = 1e-3

input_seq = tf.keras.layers.Input(tmp_x.shape[1:])
# input_dim must be at least the largest word id + 1 (id 0 is the padding value);
# english_vocab_size is assumed to come from part 1.
embedding = tf.keras.layers.Embedding(input_dim=english_vocab_size, output_dim=256)(input_seq)
rnn = tf.keras.layers.GRU(100, return_sequences=True)(embedding)
# One score per French word (french_vocab_size) at every time step
logits = tf.keras.layers.TimeDistributed(tf.keras.layers.Dense(french_vocab_size))(rnn)
model = tf.keras.models.Model(input_seq, tf.keras.layers.Activation('softmax')(logits))
model.compile(loss=tf.keras.losses.sparse_categorical_crossentropy,
              optimizer=tf.keras.optimizers.Adam(learning_rate),
              metrics=['accuracy'])
model.fit(tmp_x, preproc_french_sentences, batch_size=64, epochs=1, validation_split=0.2)

# Print prediction(s)
print(logits_to_text(model.predict(tmp_x[:1])[0], french_tokenizer))
```

From here, we could optimize this exercise further by adding an LSTM model.
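For reference, the `Embedding` layer in the second model is essentially a trainable lookup table with one row per word id: each integer in the `(samples, timesteps)` input is replaced by its row, producing a `(samples, timesteps, output_dim)` tensor that the GRU can consume. That is also why its input must be 2-D; an extra axis of size 1 would yield a 4-D tensor. A NumPy sketch of the lookup, with toy sizes and random (untrained) weights:

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, embed_dim = 6, 4                      # toy sizes; the article uses english_vocab_size and 256
table = rng.normal(size=(vocab_size, embed_dim))  # in Keras these rows are learned during training

# A padded batch of word ids, shape (samples=2, timesteps=3); 0 is the padding id
ids = np.array([[1, 4, 0],
                [2, 2, 5]])
vectors = table[ids]                              # integer-array indexing performs the lookup
print(vectors.shape)                              # (2, 3, 4)

# Ids with an extra feature axis, shape (2, 3, 1), gain an unwanted dimension
print(table[ids[..., None]].shape)                # (2, 3, 1, 4)
```

Unlike feeding raw ids as a single numeric feature, as the first model does, the lookup gives every word its own learned vector, so ids that are numerically close are not treated as similar words.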
